Transcribing Audio With OpenAI / Whisper

Recently, I was listening to a philosophy lecture by Michael Sugrue that included some commentary on T.S. Eliot. I wanted to extract that specific segment because the quotes and ideas were directly relevant to what I was working on in my Lorde chapter.

In the past, I had transcribed audio to text using various online tools. Most of them offer a few minutes free, then quickly push for a subscription. While that can work in a pinch, this particular case involved rare audio recordings—content not publicly available and accessed through a membership paywall.

Even if these transcription services are trustworthy, I felt it was inappropriate to upload someone else’s protected material. That’s when I turned to a more private, local solution: OpenAI’s Whisper.

Why Whisper?

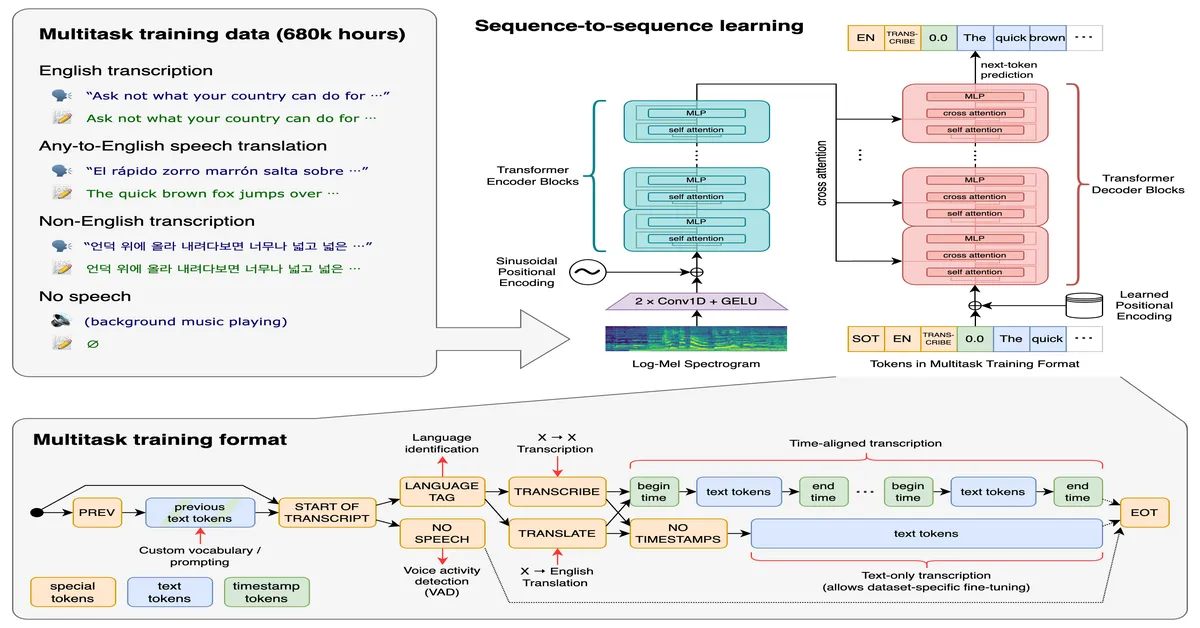

I had recently begun exploring AI tools and discovered that OpenAI’s Whisper model can be downloaded and run locally, which gave me full control over the data. Whisper comes in several model sizes (tiny, base, small, medium, and large) that trade speed for accuracy, so you can pick one based on your hardware and needs.

Even the fastest model, though less accurate than the larger ones, successfully transcribed the Sugrue lecture segment I needed. The tradeoff was worth it for data privacy and full control.

This experience reinforced a key difference in how I approach AI: I’m not using it to generate content, but to help organize and extract insights from the content I already have. At some point, I’ll write a post about using local AI models to parse and search through PDF files—a method that’s proving useful for organizing research notes and generating ideas.

This is where I feel the conversation around AI often gets muddied. There’s a knee-jerk reaction among some who conflate all AI with auto-generated content. But there’s a huge difference between using AI as a creative crutch and using it as a research tool.

In my case, Whisper became a quiet but powerful assistant—letting me do the intellectual heavy lifting, while it handled the tedious transcription work behind the scenes.

How I Ran Whisper Locally on macOS

After deciding to handle the transcription locally, I visited the Whisper GitHub page and learned that it’s an open-source project built by OpenAI. The documentation is straightforward, and it gave me confidence that I could run it myself without relying on any third-party services.

I opened Terminal on my MacBook Pro and followed the instructions to install Whisper. I installed Whisper itself with pip, and ffmpeg (which Whisper relies on for audio processing) with Homebrew:

pip install git+https://github.com/openai/whisper.git

brew install ffmpeg

For transcription, I chose the tiny model (the smallest and fastest), since I wanted to process the file quickly and didn’t require high accuracy for a simple reference segment.
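A quick way to confirm that both installs worked is to check that the commands are on the PATH. This is only a minimal sketch, not something from the Whisper documentation; `missing_tools` is a hypothetical helper name chosen for illustration.

```shell
# missing_tools: print each named command that is NOT found on the PATH.
# Returns 0 when every tool is found, 1 otherwise. (Hypothetical helper.)
missing_tools() {
    rc=0
    for tool in "$@"; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "$tool"
            rc=1
        fi
    done
    return "$rc"
}

# Check the two tools installed above and report the result.
if missing="$(missing_tools whisper ffmpeg)"; then
    echo "whisper and ffmpeg are both available"
else
    # $missing is left unquoted so the names print on one line.
    echo "not found on PATH:" $missing
fi
```

If something is reported as missing, opening a new Terminal window and checking again is a reasonable first step, since a freshly installed command is sometimes not visible in an older session.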

Step 1: Download the Lecture

I used yt-dlp, a command-line utility that lets you download audio or video from YouTube and other platforms. This made it easy to save the Michael Sugrue lecture to my computer:

yt-dlp "https://www.youtube.com/watch?v=VIDEO_ID"

Once I had the file, I used MediaHuman Audio Converter (a free GUI tool) to convert it to .mp3 format, which Whisper accepts without issue.
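yt-dlp can also extract the audio track and convert it to MP3 in a single step, using ffmpeg for the conversion, which would make the separate converter optional. The following is a sketch under that assumption, not the workflow described above: `download_mp3` and the `DRY_RUN` variable are hypothetical names, and `VIDEO_ID` is still a placeholder.

```shell
# download_mp3: download only the audio of a video and save it as MP3.
# -x (--extract-audio) and --audio-format are yt-dlp options; the
# conversion step needs ffmpeg to be installed.
# Setting DRY_RUN to any non-empty value prints the command instead of running it.
download_mp3() {
    url="$1"
    ${DRY_RUN:+echo} yt-dlp -x --audio-format mp3 "$url"
}

# Show the command that would run, without downloading anything.
DRY_RUN=1 download_mp3 "https://www.youtube.com/watch?v=VIDEO_ID"

# For a real download, call it without DRY_RUN:
# download_mp3 "https://www.youtube.com/watch?v=VIDEO_ID"
```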

Step 2: Run the Transcription

Back in Terminal, I navigated to the directory containing the audio file and ran the following command to start the transcription process:

whisper segbib.mp3 --model tiny > segbib.txt

This command runs Whisper using the tiny model on the file segbib.mp3 and redirects what it prints to a text file named segbib.txt. The simplicity of this command, and the fact that it worked so smoothly, was impressive.
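As far as I can tell, Whisper's command-line tool also writes transcript files on its own, so the redirect is not the only way to get a text file. The text it prints to the terminal normally carries a timestamp on each segment, while the .txt file it writes is plain text. The sketch below asks for just that plain-text file in a chosen folder using the `--output_format` and `--output_dir` options; the `transcribe_txt` helper, the `DRY_RUN` variable, and the `transcripts` folder name are hypothetical.

```shell
# transcribe_txt: run Whisper on one audio file and ask for a plain-text transcript.
#   $1 = audio file, $2 = model name (default: tiny), $3 = output folder (default: .)
# Setting DRY_RUN to any non-empty value prints the command instead of running it.
transcribe_txt() {
    audio="$1"
    model="${2:-tiny}"
    outdir="${3:-.}"
    ${DRY_RUN:+echo} whisper "$audio" --model "$model" \
        --output_format txt --output_dir "$outdir"
}

# Show the command that would run for the lecture file, without transcribing.
DRY_RUN=1 transcribe_txt segbib.mp3 tiny transcripts

# For a real run, call it without DRY_RUN:
# transcribe_txt segbib.mp3 tiny transcripts
```

Run for real, this should leave the transcript in transcripts/segbib.txt. Swapping `tiny` for a larger model name is the simplest way to trade speed for accuracy.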